Tuesday, December 9, 2008

Finding the Parent Path

select a.spec_id, a.sys_parent1, a.classname,

(select b.fullpath from tridata.t_spaceclasscurrent b where b.spec_id = a.sys_parent1) "PARENTPATH"

from tridata.t_spaceclasscurrent a

order by a.fullpath

Thursday, November 6, 2008

Business Connect

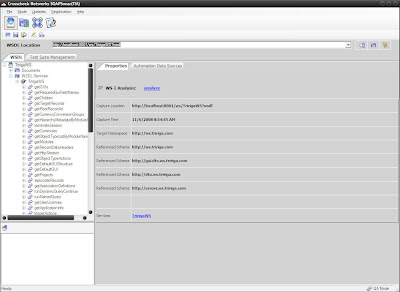

To use the product, enter your WSDL location (i.e., http://yourServerName:8001/ws/TririgaWS?wsdl) and click on the Capture WSDL button. You will see a screen that looks like this:

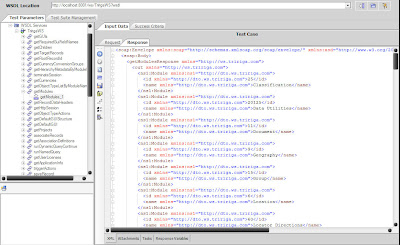

To execute a method use the tree on the left to drill into it. Once the method is selected look at the schema fields on the request, enter any required data (depending on the method you are calling), set the authentication to use your API user, and click execute.

In the example below, I executed getModules which has no required input. The screen show shows the response from the server.

Monday, September 8, 2008

Add a Documents Tab to a Custom Object

• In the Data Modeler, create an association between your object and the Document module, Document object using an association string of Has Document with a reverse string of Is Document For

• Revise and republish the business object

• Open the GUI Builder and add a xxDocuments tab

• Under your new tab create a query section called xxRelatedDocuments using Document - Display - Associated to Current Record as the query, setting the Association Type to Has Document

• Re-label the DeAssociate action to Remove

• Add a new action called Upload, check the Pop Up box, set the Action Type to Custom and enter this URL: /html/en/default/docmgmt/objectupload/objectFrameSet.jsp?folderId=-1&showFolders=true&multiFile=true

• Publish your GUI

Creating a System tab on a Custom Object

Add the following fields to your business object using Find:

- triLanguageLI

- triFormLabelSY

- triBusinessObjectLabelSY

- triRecordNameSY

- triProjectNameSY

- triRecordStateSY

- triCreatedSY

- triModifiedByTX

- triModifiedSY

I also like to include spec_id which is:

triRecordIdSY

For the Modified By field to work, you will have to perform a few additional steps:

- Create a new association to the triPeople module, triPeople business object with an association string of Auto Recorded By and a reverse association of Auto Recorded.

- Set the triModifiedByTX field to be a locator field. Locate using triPeople, Auto Recorded By and map to triNameTX

- If your object has a sub action that calls xxBusinessObjectName - Synchronous - Permanent Save Validation, it will call the xxBusinessObjectName - Synchronous - Module level business rules workflow which will update the Modified By field. If not, you will need to write workflows to update this field on any given state transition

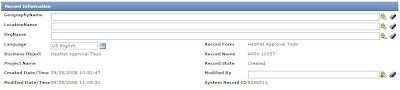

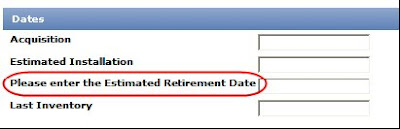

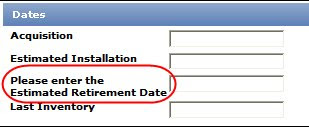

Publish the Business Object then open the GUI builder. Create a new tab called xxSystem and a new section under that tab called xxRecordInformation. Add the above fields to the layout as shown below and Publish the GUI.

If you are using triModifiedByTX, don’t forget to include a locator query on the Modified By field.

Tuesday, August 12, 2008

How to view long-running SQL Processes with Oracle 10g Enterprise Manager

To see the log running queries:

- Open the Oracle Enterprise Manager 10g Web-interface (typically found at http://DBServer:1158/em/console)

- Click on the Performance Tab

- At the bottom of the page, there is a Top Activity link

- In that page, there will be a Top SQL link. If you click on the SQL ID, it will bring up the statement that was executed.

- If it is a select, there will be a Plan tab, it will show how expensive the statement is.

- If it looks like a TRIRIGA query, either coming from a manager, portal, or GUI query section, the query should be refactored and/or redesigned to allow for better performance.

- The report can normally be easily identified by the tables or Module-views it selects from, so for example, if the statement is question looks like:

SELECT ROW1, ROW2, ROW3

FROM T_TRIBUSINESSOBJECT

WHERE ROW1 like ':1'

- Look in the report manager for a report/query/graph, etc, that is looking at the TRIBusinessObject BO.

- Test different reports by opening the report, and clicking the "Export SQL" link. The sql can be verified against what Oracle is reporting back

Gathering Database Statistics

EXECUTE DBMS_STATS.GATHER_SCHEMA_STATS('TRIDATA',DBMS_STATS.AUTO_SAMPLE_SIZE);

If 2.1.9.X is being used, also run:

EXECUTE DBMS_STATS.GATHER_SCHEMA_STATS('TRIRIGA',DBMS_STATS.AUTO_SAMPLE_SIZE);

Thursday, July 10, 2008

Capturing Traffic Between the App and Database Servers

1) Stop the application server.

Destination Host: database server

Destination Port: database port (typically 1521 for Oracle, 1433 for SQL server)

Note that technically, you don't have to establish the SSH tunnel directly to the application server; any SSH server will work (i.e. a Unix box completely unrelated to the application system). You would need to ensure the source end of the tunnel on the SSH server accepts connections from other hosts (typically an option in the tunnel configuration settings); you would then point the application server to that box instead of 127.0.0.1.

Once you have the capture, there are a variety of tools Wireshark provides to analyze the capture. For example, select Statistics -> Conversations from the Wireshark menu and click the "TCP" tab; this will show all TCP connections active during the capture period. You can start examining these by selecting an entry from the list, right clicking, and selecting "Apply as Filter -> Selected -> A<->B" from the popup menu. This will filter the packet window to display only that connection. You can also view or save all the traffic for a connection as text by selecting a packet in the list and choosing "Follow TCP Stream". For many protocols, Wireshark includes dissectors which will parse out the traffic and present it semantically (for example, it can decode HTTP traffic and display request/response information). I don't believe there is currently a dissector for Oracle, but you can view the raw TCP streams to see the SQL statements going over the wire

Monday, July 7, 2008

Identifying Linked Excel Cells

Excel linked cells are stored in the following Table ‘SOBJTYPE_EXCEL_PARAMS’. The spec_template_id column is actually the GUI_ID that the excel template is embedded in, not the business object’s spec_template_id. To look up the GUI_ID look up the GUI name in the following Table ‘GUI_FORMS’. This will give you the spec_template_id to use when finding all the excel links.

Use this script and update the spec_template_id to match the GUI you need to pull the cell links from. In this example it is the ‘triRETransactionLeaseTerms’ GUI.

Select EXCEL_ROW, EXCEL_COL, PARAM_ID, PARAM_STR, PARAM_DISP_STR, ROW_NUM TABLE_VIEW_FLAG from SOBJTYPE_EXCEL_PARAMS where spec_template_id = 10003842;

Thursday, June 26, 2008

Capturing HTML Packets

Download and install Wireshark from here:

http://prdownloads.sourceforge.net/wireshark/wireshark-setup-1.0.0.exe

After you get it installed, start it up; under the menu go to "Capture -> Interfaces". It should pop up a dialog showing all the network interfaces on the box; generate some traffic (open a web page etc.) and the "Packets" column should start counting up. That's the interface you want to sniff. From that dialog, click the "Options" button next to the interface that you identified. In the "Capture Filter" field of the options dialog, enter the following:

host ServerName && (port 80 || port 443 || port 8080 || port 8443 || port 8001)

(assuming ServerName is the server you want to monitor). Then click start. Go perform whatever operation you need to trace. Then select "Capture -> Stop" from the menu when it's done. Then save the result (pcap format should be the default, which is correct).

Wednesday, June 18, 2008

Multi-Line Field Labels

To solve this issue an break sequence can be added to split the label to multiple lines as shown below.

Here are the steps:

- In tririgaweb.properties, remove "<", ">" and "&" from the EXCLUDE_CHARACTERS line. It should look like this: EXCLUDE_CHARACTERS={

- Save tririgaweb.properties. Make the same changes on your process server, if applicable

- Restart Jboss, Weblogic or WebShpere on all app and process servers

- In the GUI Builder, Revise the GUI, select the field and edit the label as shown below by inserting a break sequence where you want to split the label

- Apply the changes and Publish the GUI

Tuesday, May 6, 2008

Change the Browser Title

Thursday, May 1, 2008

Setting up Flexible Menus

Setting these up is not very difficult, and to make it just a little easier a colleague of mine put together a document describing exactly how to do it. Click here to download the PDF document.

Setting these up is not very difficult, and to make it just a little easier a colleague of mine put together a document describing exactly how to do it. Click here to download the PDF document. Thanks Srinath!

Troubleshooting System Performance

- Make sure your hardware is appropriately sized. TRIRIGA will typically help you with this. You just need to provide them the details of how you use (or anticipate to use) the system, such as number of users, average number of new objects you add per day, number of objects in the system etc.

- Verify that your database and application/process servers have sufficient memory allocated to them. Make sure you have sufficient memory on your severs to support the values you use

- Optimize portal performance by removing as many queries as possible from portals and eliminate or at least cut back on the use of auto-refresh.

- Upgrade to a current platform release – TRIRIGA is constantly improving the platform. Check the release notes for all of the more current platforms to determine if any new enhancements can help you.

- Try to determine if the performance issues are related to the database or to process/app servers. Check memory & CPU usage to help determine this

- Have a DBA capture long running SQL queries by running Statspack, and try to determine where they originate in the system. Many come from queries within the system that can be tuned to perform better. I have a post on my blog about this: http://www.tririga.info/2008

/01/analyzing-long-running-sql .-queries.html - Disable any unnecessary workflows. For example, if you don't use out of box approvals or notifications you can bypass a lot of system overhead by disabling these workflows, even on a per-module basis. (Be careful here if you are not familiar with workflows)

- Check the database for disproportionate table sizes. I had a customer with 250 million rows in IBS_SPEC_ASSIGNMENTS and only 10 million rows in IBS_SPEC

- Get a count of all objects in the database to be sure what you have is what you expect; you may find yourself with 250k discussion threads that you never use. Here is an article in my blog on how to do this: http://www.tririga.info/2008/01/get-count-of-all-objects-in-trirga-9.html

- Try to isolate specific actions that perform poorly, like creating a particular type of record. Analyze what the system does internally when these actions occur. Try to determine if a specific workflow or query is causing the problem and work to fix it.

If you have anything to add to this list, please let email me and let me know.

Thursday, April 24, 2008

Find Corresponding Object and Module Names

/* Get BO and corresponding Module Names - run in tririga schema */

SELECT b.NAME AS bo_name, m.module_name

FROM module_view_map m, ibs_spec_type b

WHERE m.module_id = b.spec_class_type

ORDER BY NAME

Tuesday, April 22, 2008

Utility for Viewing Configuration and Log Files

Monday, April 21, 2008

Locator Fields and Object Metadata Cache

Wednesday, April 9, 2008

Turn an Error Message into a SQL Statement

2008-03-20 15:39:07,946 ERROR [com.tririga.design.smartobject.dataaccess.GenerateSqls](ExecuteThread: '13' for queue: 'weblogic.kernel.Default') Statement : update T_TRISERVICEREQUEST set sys_projectid = 1,triDescriptionTX = ?,triStatusCL = ?,triStatusCLObjId = ?,triControlNumberCN = ?,Sys_Parent1 = ?,triPreviousStatusCL = ?,triPreviousStatusCLObjId = ?,triRequestClassCL = ?,triRequestClassCLObjId = ?,triModifiedSY = ?,coContactNumberTX = ?,triRecordNameSY = ?,triRecordStateSY = ?,triModifiedByTX = ?,triModifiedByTXObjId = ?,triRequestFullNameTX = ? where spec_id = ?

Values :

test4,

Review In Progress,

1601066,

REQ 1000516,

0,

Draft,

1600256,

CRE Moves, ten or less associates (MOVES03),

4737839,

03/20/2008 03:39:07 PM,

xxx,

REQ 1000516-0,

triReview,

Zombron, Robert - eyk343,

3835835,

REQ 1000516-CRE Moves, ten or less associates (MOVES03),

6564922,

To troubleshoot why this error message was thrown, turn it into a SQL statement like the one below, run it in SQLPlus and use your SQL troubleshooting skills to solve the problem. In this example. the triRequestFullNameTX field was set to 50 characters but the data we were trying to push had 55 characters. The solution was simply expanding the percision of the offending field to a number larger then 55.

update T_TRISERVICEREQUEST

set sys_projectid = 1,

triDescriptionTX = 'test4',

triStatusCL = 'Review In Progress',

triStatusCLObjId = 1601066,

triControlNumberCN = 'REQ 1000516',

Sys_Parent1 = 0,

triPreviousStatusCL = 'Draft',

triPreviousStatusCLObjId = 1600256,

triRequestClassCL = 'CRE Moves, ten or less associates (MOVES03)',

triRequestClassCLObjId = 4737839,

triModifiedSY = '03/20/2008 03:39:07 PM', -- NOTE may need to be formatted correctly for this column

coContactNumberTX = 'xxx',

triRecordNameSY = 'REQ 1000516-0',

triRecordStateSY = 'triReview',

triModifiedByTX = 'Zombron, Robert - eyk343',

triModifiedByTXObjId = 3835835,

triRequestFullNameTX = 'REQ 1000516-CRE Moves, ten or less associates (MOVES03)'

where spec_id = 6564922

Thursday, April 3, 2008

Site Style Manager Tip

Wednesday, March 26, 2008

General Troubleshooting

- Look for a pattern. Can you recreate what just happened?

- Look in the server.log (and output.log for Weblogic). Do you see any strange error messages? (I will post a future blog entry for troubleshooting log messages)

- If the problem appears to be with workflows, turn on workflow logging and look at every workflow that fires. Many workflows call other workflows, so this could take a few iterations.

- Are the issues related to performance? If you have access to a DBA, ask them to help identify the long running SQL queries and run and explain plan. Look at my posting about analyzing long running SQL queries.

- If the issues are related to specific records in the database, use TOAD (or a similar tool) to compare good records with bad records in both the T_BONAME table and IBS_SPEC table. Are there any inconsistencies between good and bad records?

- Are objects stuck in a "bad" state? Use TOAD to be sure the sys_objectstate in the T_BONAME table and object_state in the IBS_SPEC table - be sure these match. These field can be updated to put the object into a valid state.

- Are fields not getting filled out by workflow as expected? Check the field length of the desination fields to be sure you're not trying to push 150 characters into a 100 character field

Monday, March 17, 2008

Object Name Exists Error Message

select count(*), type_name from IBS_SPEC

where spec_name is null

group by type_name

order by count(*) desc

Fixing Objects Stuck in the Wrong State

Friday, March 14, 2008

Search For Orphan Associations

SELECT ist.NAME "BO Name", ist.spec_template_id "BO ID",

COUNT (1) "Orphan Count"

FROM (SELECT DISTINCT spec_id, spec_template_id

FROM (SELECT isa1.spec_id, isa1.spec_template_id

FROM ibs_spec_assignments isa1

WHERE NOT EXISTS (

SELECT 1

FROM ibs_spec ibs1

WHERE ibs1.spec_id =

isa1.spec_id)

UNION ALL

SELECT isa2.ass_spec_id, isa2.ass_spec_template_id

FROM ibs_spec_assignments isa2

WHERE NOT EXISTS (

SELECT 1

FROM ibs_spec ibs2

WHERE ibs2.spec_id =

isa2.ass_spec_id))) orphans

INNER JOIN

ibs_spec_type ist ON ist.spec_template_id = orphans.spec_template_id

GROUP BY ist.NAME, ist.spec_template_id

ORDER BY 3 DESC;

Momentum Migration Fails on Validation

the validation phase (server.log file)

indicates "Validating row 18000/400000" or similar) then this

is the script to run as user TRIRIGA on the database:

UPDATE ENVIRONMENT_PROPERTIES SET VALUE = 'false' WHERE

ENVIRONMENT = 'SYSTEM' AND PROPERTY = 'LOCK';

COMMIT;

Then, if necessary, kill the java processes on app server (killall -KILL

java) and restart Jboss/weblogic instance; it will skip the

validation step and go on.

Tuesday, March 4, 2008

Monitoring System Workflows

INSERT INTO user_credentials

(user_id, company_id, user_account, admin_console_permissions)

VALUES

(0, 208133, 'Crazy User', 0);

Tuesday, February 26, 2008

Monitoring Workflows

--Workflows Created per Hour (in the queue to be processed)

select count(*), to_char(created_date, 'mm/dd/yyyy HH24') from wf_event

group by to_char(created_date, 'mm/dd/yyyy HH24');

--Workflows Processed per Hour

select count(*), to_char(completed_date, 'mm/dd/yyyy HH24') from wf_event_history

group by to_char(completed_date, 'mm/dd/yyyy HH24');

Tuesday, February 5, 2008

Rebuilding Oracle Database Indexes

Simulated SMTP Server

Setup

Setting it up is easy. Create a folder on your PC, for example c:\smtp. Copy the smtp.jar file to your new folder and at a command prompt navigate to the new folder and run the following: C:\SMTP>java -jar smtp.jar. Leave the command prompt box open.

The final step is setting your TRIRIGA application to use your PC as it's SMTP server. Edit the tririgaweb.properties file (or use the System Manager in the Admin Console) and set mail.smtp.host = your local IP address. All that left is to restart JBoss/Weblogic so the application uses the new settings.

Usage

Once everything is set up as described above usage is pretty straightforward. Use the application to trigger a notification (close a work task, etc.) and watch your command window. You will see log entries each time the system receives a message. It should look similar to this:

C:\SMTP>java -jar smtp.jar

Starting session with /10.119.216.152

Closing session with /10.119.216.152

Now open Windows Explorer and navigate to the C:\SMTP directory. In addition to the smtp.jar file you should have two new files, xxxxxxxxx-x.log and xxxxxxxxx.mht. The log file show the raw text of the email message and the mht shows the formated text. You can open the mht file is a browser to look at the content, or change the .mht extention to .eml and open the file in Outlook Express.

Wednesday, January 30, 2008

Troubleshooting Workflow Performance

Have you ever had some stuff that was taking a long-time to run, and wanted to figure out where it was spending it's time. These SQL statements may help you figure it out. You need to change the value of the date string to the point in time you want to start tracking...

-- ----------------------------------------------------------------------

-- Lists all workflows instances that have fired since a given date and

-- time, in the order they fired along with their status

-- ----------------------------------------------------------------------

select wf_name, bo_type_name, bo_event_name,

case

when status_id = 1 then 'In Progress'

when status_id = 40 then 'Running'

when status_id = 90 then 'Stopped'

when status_id = 100 then 'Completed'

else 'Unknown'

end as status

,start_date, end_date

from wf_template

where template_flag not in (10,20,25,30) -- 10,20,25,30 are templates, everything else is an instance

--and status_id <> 100 -- 100 are completed, so uncomment this to see only those that haven't finished running

and created_date > to_date('01/29/2008 01:23:45 PM', 'MM/DD/YYYY HH:MI:SS AM')

order by created_date asc

-- ----------------------------------------------------------------------

-- Lists workflows instances that have fired since a given time, along

-- with how long it took for them to process

-- ----------------------------------------------------------------------

select wf_name, bo_type_name, bo_event_name, trunc(86400*(end_date-start_date))-60*(trunc((86400*(end_date-start_date))/60)) as processing_time_secs

from wf_template

where template_flag not in (10,20,25,30) -- 10,20,25,30 are templates

and created_date > to_date('01/29/2008 01:23:45 PM', 'MM/DD/YYYY HH:MI:SS AM')

order by 4 desc

-- ----------------------------------------------------------------------

-- Gives a average processing time and number of times a given workflow

-- (by name) has fired since a specified date and time

-- ----------------------------------------------------------------------

select wf_name, count(*), avg(trunc(86400*(end_date-start_date))-60*(trunc((86400*(end_date-start_date))/60))) as processing_time_secs

from wf_template

where template_flag not in (10,20,25,30) -- 10,20,25,30 are templates

and created_date > to_date('01/29/2008 01:23:45 PM', 'MM/DD/YYYY HH:MI:SS AM')

group by wf_name

order by 3 desc

Thursday, January 24, 2008

Bypassing the TRIRIGA 9 Approval Process

Using assets as an example, most of my clients never use the out-of-box approval process for activating an asset. Here's what I do to bypass the approval process

1. Retire Workflow: triAsset - triActivate - Submit for Approval and Dependant Record Update

2. Modify Workflow: triAsset - triActivate - Make Dependant Records Read Only (add a step at the end of the workflow to have it call triFinalApprovalHidden)

By doing this your are calling executing the hidden approval step without creating any of the intermediate approval objects. It is also easy to put this back in place if you choose to use the approval process in the future, simply reverse the process. The workflows for most objects have the same name as the one's above (except for the BO name) and the process to disable them is the same.

Additionally, most of my clients don't need or want any notifications on status change of an object. If you don't need a notification on any status change for a particular object (assets in this example), bypassing this process is easy, simply retire the following workflow: triAsset - Associate - triStatus - Create Notification Details current status. The saves the system from having to run this workflow for every status change of every object. To turn it back on, just republish the workflow.

Tuesday, January 22, 2008

IBS_SPEC_ASSIGNMENTS Table

Is your IBS_SPEC_ASSIGNMENTS table getting too large? One of my clients currently has 19 GB of data in over a quarter billion rows in this table alone. Here is some SQL to help analyze the situation:

This SQL Statement will determine what the top business objects and association types are in the ibs_spec_assignments table:

select spec_class_type, ass_type, count(*) as cnt

from ibs_spec_assignments

group by spec_class_type, ass_type

order by cnt desc

You may be able to narrow down some records to clean up from there. Also, you may want to find out what exists in the ibs_spec_assignments table that is no longer in the ibs_spec table:

select count(*) from ibs_spec_assignments where spec_id not in (select spec_id from ibs_spec)

and

select count(*) from ibs_spec_assignments where ass_spec_id not in (select spec_id from ibs_spec)

I removed the cleanup script that I used at the request of TRIRIGA. You can send me an email if you need more information.

Friday, January 18, 2008

Worklfow Cleanup Script

-- Workflow Instance information is in the following tables:

-- (many also have template information - so they can't simply be truncated)

--

-- TASK

-- TASK_ASSIGNEES

-- TASK_FILTER

-- TASK_RESULT_LIST

-- WF_CALLWF_RESULT_LIST

-- WF_CONDITION

-- WF_TEMPLATE_STEP

-- OBJECT_TYPE_MAP

-- WF_STEP_INST

-- WF_INSTANCE

-- WF_TEMPLATE

--

-- Select WF Instances that ended at a point older than 'date' and are not active or waiting for a user.

-- SELECT WFT.* FROM WF_TEMPLATE WFT WHERE WFT.TEMPLATE_FLAG = 0 AND WFT.STATUS_ID NOT IN (40,60,61) AND WFT.END_DATE < version =" 0" version =" 0" wf_template_id =" WFT.WF_TEMPLATE_ID" template_flag =" 0" wf_template_id =" WFT.WF_TEMPLATE_ID" template_flag =" 0" wf_template_id =" WFT.WF_TEMPLATE_ID" template_flag =" 0" wf_template_id =" WFT.WF_TEMPLATE_ID" template_flag =" 0" wf_pid =" WFT.WF_TEMPLATE_ID" template_flag =" 0" wf_template_id =" WFT.WF_TEMPLATE_ID" template_flag =" 0" wf_template_id =" WFT.WF_TEMPLATE_ID" template_flag =" 0" wf_template_id =" WFT.WF_TEMPLATE_ID" template_flag =" 0" wf_inst_id =" WFT.WF_TEMPLATE_ID" template_flag =" 0" wf_pid =" WFT.WF_TEMPLATE_ID" template_flag =" 0" template_flag =" 0">

Suspending Workflow Instance Logging

-- ****************** BEFORE MIGRATION *********************------

-- Create backup of instance_data_flag values ------

-- Update all templates to set instance flag = false ------

--------------------------------------------------------------------------------------------

create table wf_template_bk as (

select wf_template_id, wf_template_version, instance_data_flag from wf_template where template_flag = 1);

create table wf_lookup_bk as (

select wf_template_id, wf_template_version, instance_data_flag from wf_lookup);

update wf_template t1 set t1.instance_data_flag = 0 where t1.template_flag = 1;

-- ****************** AFTER MIGRATION **********************------

-- Update from backup of instance_data_flag values ------

-- Drop backup tables ------

------------------------------------------------------------------------------------------

update wf_template t1 set t1.instance_data_flag = (select t2.instance_data_flag from wf_template_bk t2 where t1.wf_template_id = t2.wf_template_id and t1.wf_template_version = t2.wf_template_version)

where t1.template_flag = 1;

update wf_lookup t1 set t1.instance_data_flag = (select t2.instance_data_flag from wf_lookup_bk t2 where t1.wf_template_id = t2.wf_template_id and t1.wf_template_version = t2.wf_template_version);

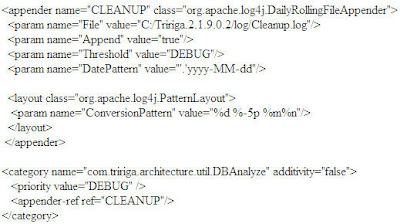

Monitoring the Cleanup Agent

Be sure to set the File Value parameter to point to the directory you want the log file to be created in. You should probably use the same path as the existing appender in the log4j file. You will probably need to restart Jboss/Weblogic for the change to take affect. The result will be a cleanup.log file that will show you messages similar to the ones below:

DEBUG DBAnalyze - Clean up - Setting Cleanup Batch size to 900

2008-01-17 20:04:41,963 DEBUG Deleted 900 runtime process only WF Templates and associatated data

2008-01-17 22:13:48,040 DEBUG Deleted 900 runtime process only WF Templates and associatated data

Wednesday, January 16, 2008

Analyzing Long Running SQL Queries

Consider the following SQL statement:

select t1.spec_id ,t1.SYS_TYPE1 , t1.triUserMessageFlagTX , t1.triIdTX ,

t1.triNameTX , t1.triIdTX , t1.triNameTX , t1.triRequestedByTX , t1.triCreatedSY ,

t1.triStatusCLOBJID from

M_TRIREQUEST t1 where t1.SYS_OBJECTID>0 and t1.SYS_TYPE1 = 1.0002583E7

and t1.SYS_PROJECTID = 1.0

and isnull(t1.triStatusCL, -9223372036854775808)

not in ('Retired','Upload Error','Template', 'History', 'Deleted')

and t1.spec_id in ( select ta1.ass_spec_id from ibs_spec_assignments ta1

where ta1.spec_id in (208133)

union select ta1.spec_id from

ibs_spec_assignments ta1 where

ta1.ass_spec_id in (208133) )

order by upper(t1.triIdTX) ,upper(t1.triNameTX) ,upper(t1.triIdTX) ,upper(t1.triNameTX)

Take a fairly unique column, triRequestedByTX for example, and find all the places that is being used in conjunction with the table/view name: M_TRIREQUEST by running the following SQL query:

select * from REP_TEMPLATE_COLUMNS where field_name = 'triRequestedByTX'

and table_name = 'M_TRIREQUEST'

Then, select the rep_template_id and find the names of the reports from the rep_template_hrd table

select rep_name from rep_template_hdr where rep_template_Id in (17879,25749)

This could be done in one statement like:

select rep_name, rep_template_id from REP_TEMPLATE_HDR where rep_template_id in

(

select distinct rep_template_id from REP_TEMPLATE_COLUMNS

where field_name = 'triRequestedByTX'

and table_name = 'M_TRIREQUEST'

)

Now, open TRIRIGA, go into report manager, click on “open” for any report. Press CTRL-N to open a new window, where you can edit the URL. Replace the report template id in the URL with the one provided above and the offending report will open.

Once you find the offending report you may want to reconsider how you have your filters set up, or you may want to remove it from a portal section or frequently used object. An alternative to removing it is to just remove it from the first tab of an object so it does not run as often.

Wednesday, January 9, 2008

WF_STEP_INST Table

1) active workflows

2) workflows with pending tasks (e.g. user tasks)

Unless you have tons of workflows with user tasks that are leaving the workflows active (and hence the instance data in the system) or have a lot of orphaned data, this table shouldn't have too many rows. Removing the check next to Save Instance Data in workflow start tasks reduces the number of rows inserted (and eventually deleted) from this table. This can be important when doing large data loads, as I have seen delete operations against this table become a bottleneck when the system is processing large quantities of workflows after a data load.

Monday, January 7, 2008

Tracking Data Integrator Loads

select ibs.created_date "STARTED_DATE",

ibs.updated_date "FINISHED_DATE",

ibs.object_state "STATUS",

mp.sys_useraccount "USER_ACCOUNT",

ibs.spec_name "UPLOAD_NAME"

from ibs_spec ibs, t_myprofile mp

where ibs.spec_template_id = 106548 and

ibs.created_by = mp.spec_id

order by 1 desc;

Friday, January 4, 2008

Scripts to Identify Object Changes

-- Business Objects (run in tridata schema)

select Updated_Date, s.name

from ibs_spec_type s

where updated_date > SYSDATE -1

order by s.name;

--Workflows (run in tririga schema)

select Updated_Date, data_value Module, wf_name

from wf_lookup w, list_value m

where updated_date > SYSDATE -1

and m.LIST_ID = 2

and m.list_value_id=w.module_id

order by m.data_value, w.wf_name;

--GUIs (run in tririga schema)

select Updated_Date, data_value Module, g.gui_name

from gui_header_publ g, list_value m

where updated_date > SYSDATE -1

and m.LIST_ID = 2

and m.list_value_id=g.spec_class_type

order by m.data_value, g.gui_name;

--Queries (run in tririga schema)

select Updated_Date, data_value Module, r.rep_name

from rep_template_hdr r, list_value m

where updated_date > SYSDATE -1

and m.LIST_ID = 2

and m.list_value_id=r.class_type_id

order by m.data_value, r.rep_name;

--Managers which have been modified

select Updated_Date, name Manager_Name from ibs_manager_description

where updated_date > SYSDATE -1

order by name;

--Portals (run against tririga schema)

select distinct Updated_Date, portal_name from user_portal

where updated_date > SYSDATE -1

order by portal_name;

--Portal Sections (run against tririga schema)

select updated_date, portal_section_name from portal_section

where updated_date > SYSDATE -1

order by portal_section_name;

Diplaying UOM Values on Form Reports

Thursday, January 3, 2008

System Usage Script

SELECT DISTINCT

user_credentials.user_account AS USERNAME,

session_history.start_time AS LOGIN

FROM

user_credentials, session_history

WHERE

user_credentials.user_id = session_history.user_id AND

session_history.start_time > (sysdate - 30)

UNION ALL

SELECT DISTINCT

user_credentials.user_account AS USERNAME,

active_session_user.start_time AS LOGIN

FROM

active_session_user, user_credentials

WHERE

user_credentials.user_id = active_session_user.user_id

ORDER BY 1, 2;

External Redirect

To get the URL to the form you would like to use simply go the the form in the application (i.e., open a new request), push the F11 key on your keyboard and copy the URL to your clipboard. It will look like the first example below. Then all you need to do is insert http://your_servername/html/en/default/admin/extRedirect.jsp?url= before the /WebProcess.srv?.... portion of the URL.

Instead of this:

Link to this:

Wednesday, January 2, 2008

Hosting HTML Files Locally

All you have to do is copy your html file to the /userfiles/company-1 directory and set your URL to look like this:

/getCompanyFile.jsp?fileLoc=//Company-1/myfile.htm

Data Cleanup Tool

http://ServerName:8001/html/en/default/datadictionary/dataCleanupUtility.jsp

Be very careful using this tool as it can cause some serious damage to your system. Here are a few comments from internal TRIRIGA sources:

“I would DEFINITELY make an export (backup) before running these scripts. They aren’t supported by Platform, so use AS IS (and I wouldn’t give them to a customer without permission).”

“Ok, so the cat's out of the bag so to speak, but please please please do not consider these clean scripts ready to be used without a thorough understanding of the scripts and the results they will have on your system. I encourage you to please contact a PA/TA to walk through these before you employ them. To xxxx's comment, obviously do not share with clients without explicit agreement from TS leadership and the PM on the project. These will delete pretty much all instance data in your system...please tread lightly.”

"Rumor has it that QA plans to support and document this utility….eventually. But, no word yet on when that may happen. So, unfortunately, everyone is on their own. You might try calling tech support and asking about it, but you’ll need to get past the first level tech support people….who will probably refuse to even talk to you about it."

Get a Count of all Objects in TRIRGA 9

This script should be run against your tridata schema.

SELECT

spec_template_id,

type_name,

COUNT(1) COUNT

FROM

ibs_spec

GROUP BY spec_template_id, type_name

ORDER BY 3 DESC