My client recently upgraded to the IBM TRIRIGA 3.5.1.3 platform. After the upgrade, I found that certain forms were no longer available for selection in the Report Manager. Form Builder clearly shows that there are nine forms on the triPeople business object.

Switching to Report Manager, only five of them are available to add to a new report.

It turns out that when more than one form with the same label exists for the same business object, only one of them will display on the report form.

Friday, February 24, 2017

Monday, June 2, 2014

Workflow Instances and Associations

Have you ever wanted to peek at the Workflow Instance tab or Associations tab on a single-tab form, or on a form where those tabs are not set to display? Here is an easy solution: open a new browser tab and paste in one of the URLs below, updating the server name and port and replacing the x's with the spec ID of the record.

URL to view workflow instances (where "xxxxx" is the spec ID for the record):

http://YourServer:Port#/html/en/default/wfBuilder/wftInstanceListForRecord.jsp?SOId=xxxxx

URL to view associations:

http://YourServer:Port#/html/en/default/visualization/svgassociation/SvgAssociation.jsp?specId=xxxxx
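If you open these pages often, a small helper can build both URLs from the server, port and spec ID. This is a Python sketch: the two paths are copied from the URLs above, while the function names and the sample server, port and spec ID are hypothetical. It assumes plain http, as in the URLs above; switch to https if your server is configured for it.

```python
from urllib.parse import urlencode

# Paths copied from the URLs in this post (relative to the TRIRIGA web root).
WORKFLOW_INSTANCES_PATH = "/html/en/default/wfBuilder/wftInstanceListForRecord.jsp"
ASSOCIATIONS_PATH = "/html/en/default/visualization/svgassociation/SvgAssociation.jsp"


def workflow_instances_url(server: str, port: int, spec_id: int) -> str:
    """Build the workflow-instance list URL for one record (SOId is the spec ID)."""
    return f"http://{server}:{port}{WORKFLOW_INSTANCES_PATH}?{urlencode({'SOId': spec_id})}"


def associations_url(server: str, port: int, spec_id: int) -> str:
    """Build the association view URL for one record (specId is the spec ID)."""
    return f"http://{server}:{port}{ASSOCIATIONS_PATH}?{urlencode({'specId': spec_id})}"


if __name__ == "__main__":
    # Hypothetical server, port and spec ID; substitute your own values.
    print(workflow_instances_url("tririga.example.com", 8001, 123456))
    print(associations_url("tririga.example.com", 8001, 123456))
```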

Tuesday, May 27, 2014

Action Items Not Displaying

Here's an issue that I've seen a few times: the Reminders portal section shows a count of one or more action items assigned to a user, but when you drill into the Action Items portal section, no action items display. The solution is to make sure that any workflows with User Action or Approval tasks assign the task to an associated My Profile record, not to a triPeople record. The out-of-box Action Items portal section (sysActionItems) only shows action items associated with a My Profile record.

Wednesday, May 22, 2013

Security Group Permissions Report

If you need to get a handle on your security groups, I have a Crystal Report that displays your security details in a viewer-friendly table format. The data is retrieved by a SQL command, so you can modify the where clause at the end of the SQL to limit the data returned as needed. You can download the report here.

Wednesday, March 14, 2012

Undocumented Steps for Using BIRT

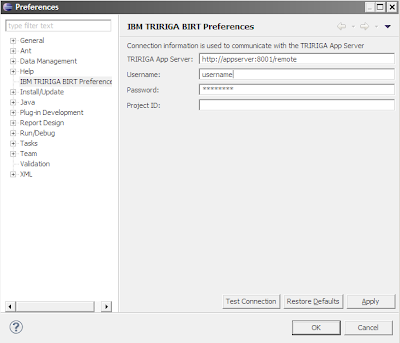

In order to use BIRT for report development in TRIRIGA, there are a few undocumented steps you will need to take to make it work. Start by installing BIRT and the IBM TRIRIGA plug-in, using the Reporting User Guide as a reference. You will then need to set the IBM TRIRIGA BIRT Preferences.

In the BIRT application menu, go to Window/Preferences and find the IBM TRIRIGA BIRT Preferences. Set the value for your TRIRIGA App Server to "http://servername:8001/remote", and include your username and password. Once this is done, click the "Test Connection" button and verify that you get a "Connected to TRIRIGA" message.

Another important step is to check the value of FRONT_END_SERVER in your tririgaweb.properties file. This will typically be "appserver:8001", but it may vary in your environment. If this value is not set correctly, the report will not display properly in the application; you will probably see something like the screenshot at the top of this post.
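If you have file access to the application server, a quick script can confirm what FRONT_END_SERVER is actually set to. This Python sketch assumes tririgaweb.properties uses simple key=value lines; it is a simplified parser (no line continuations or escapes), the function name is hypothetical, and the file path in the example is a placeholder because the file's location depends on your installation.

```python
from pathlib import Path
from typing import Optional


def read_front_end_server(properties_text: str) -> Optional[str]:
    """Return the FRONT_END_SERVER value from tririgaweb.properties text, or None.

    Simplified: handles "key=value" and "key: value" lines and skips comments,
    but not line continuations, escapes, or whitespace-only separators.
    """
    for raw_line in properties_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith(("#", "!")):
            continue  # blank line or comment
        # The key ends at the first '=' or ':'; the value (e.g. "appserver:8001")
        # may itself contain ':', so only the first separator counts.
        cuts = [i for i in (line.find("="), line.find(":")) if i != -1]
        if not cuts:
            continue
        cut = min(cuts)
        if line[:cut].strip() == "FRONT_END_SERVER":
            return line[cut + 1:].strip()
    return None


if __name__ == "__main__":
    # Placeholder path; point this at the tririgaweb.properties file on your server.
    props = Path("tririgaweb.properties")
    if props.exists():
        # .properties files are traditionally ISO-8859-1 encoded.
        print(read_front_end_server(props.read_text(encoding="latin-1")))
```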

Finally, once you have built your first report and packaged it as a zip file (as documented in the Reporting User Guide), you will need to modify the archive before uploading it to Document Manager. TRIRIGA can only read the report files if they are in the root of the zip file, so the zip file as exported will not work. The easiest fix is to copy the files out of the zip file, delete any directories in it, and copy the files back into the root of the zip file. If you skip this step, the report will display properly when you preview it in BIRT, but running it from the application will produce an error message.
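The root-of-the-zip requirement is easy to script instead of doing by hand. This Python sketch uses only the standard zipfile module to write a new archive with every file moved to the root and directory entries dropped, leaving the exported zip untouched. The function name and the file names in the usage line are hypothetical, and it assumes no two files in different folders share a name; if they do, it raises an error rather than silently overwriting one.

```python
import zipfile
from pathlib import PurePosixPath
from typing import List


def flatten_report_zip(src: str, dst: str) -> List[str]:
    """Copy every file in src into a new archive dst at the root, dropping directories.

    Returns the names written. src and dst must be different files.
    """
    written: List[str] = []
    with zipfile.ZipFile(src) as zin, zipfile.ZipFile(dst, "w", zipfile.ZIP_DEFLATED) as zout:
        for info in zin.infolist():
            if info.is_dir():
                continue  # directory entries are not copied
            # Keep only the base file name; normalize any Windows-style separators first.
            name = PurePosixPath(info.filename.replace("\\", "/")).name
            if name in written:
                raise ValueError(f"two files would both become {name!r} at the root")
            zout.writestr(name, zin.read(info))
            written.append(name)
    return written
```

Usage, with hypothetical file names: `flatten_report_zip("MyReport.zip", "MyReport-flat.zip")`, then upload the flattened file to Document Manager.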

Tuesday, August 16, 2011

Impact of Changing List Values

A couple of weeks ago I wrote about determining the impact of changing classification values, so that you can judge a change before making a data change you will later regret. In the same way, you can determine the impact of changing list values by finding where else in the application a particular list is used.

To determine which objects use a specific list, run the following SQL script, replacing the value of ATR_NAME below with the name of the list field you are looking for:

```sql
select name from IBS_SPEC_TYPE
where SPEC_TEMPLATE_ID in (select SPEC_TEMPLATE_ID from IBS_SPEC_VALUE_META_DATA
                           where ATR_TYPE = 'List'
                           and ATR_NAME = 'triTaxPaidToLI')
```

The results of this query show which business objects have a field (with the name specified in ATR_NAME above) that points to that list. Using this, you can go into the Data Modeler for each object, select the field that uses the list and click 'Where Used'. This pops up a window showing all GUIs, queries and workflows where the field is used. Pay special attention to workflows that show 'Workflow Condition' in the Action column: this will help identify workflows that may use specific list values in their logic.
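To run the list query from a script rather than a SQL client, the field name can be passed as a bind parameter instead of being edited into the SQL text. This Python sketch works with any DB-API 2.0 connection to the TRIRIGA database; the function name is hypothetical, and the `?` placeholder is the qmark style used by drivers such as sqlite3 and pyodbc, so adjust it if your driver uses a different paramstyle.

```python
from typing import List

# The list query from this post, with ATR_NAME supplied as a bind parameter.
LIST_USAGE_SQL = """
select name from IBS_SPEC_TYPE
where SPEC_TEMPLATE_ID in (select SPEC_TEMPLATE_ID from IBS_SPEC_VALUE_META_DATA
                           where ATR_TYPE = 'List'
                           and ATR_NAME = ?)
"""


def objects_using_list_field(conn, field_name: str) -> List[str]:
    """Return the names of business objects with a list field named field_name.

    conn is any DB-API 2.0 connection whose driver accepts '?' placeholders.
    """
    cur = conn.cursor()
    try:
        cur.execute(LIST_USAGE_SQL, (field_name,))
        return sorted(row[0] for row in cur.fetchall())
    finally:
        cur.close()
```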

Wednesday, July 27, 2011

Impact of Changing Classification Values

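You may find your users want you to remove or replace some classification values, but before you make the requested changes, you need to determine whether that classification is used anywhere else in the product and what the impact of changing it will be. To find which objects use a classification, run the following SQL script, replacing the value of classification_root_name below with the name of your classification root: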

```sql
select name
from IBS_SPEC_TYPE
where spec_template_id in
  (select spec_template_id from ibs_spec_value_meta_data
   where atr_type = 'Classification' and
   classification_root_name = 'Location Primary Use')
```

The results of this query show which objects have a field that points to that classification root value. Using this, you can go into the Data Modeler for each object, select the field that uses the classification and click 'Where Used'. This pops up a window showing all GUIs, queries and workflows where the field is used. Pay special attention to workflows that show 'Workflow Condition' in the Action column: this will help identify workflows that may use specific classification values in their logic.
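When users ask for changes across several classifications at once, it can help to run the classification query for each affected root in one pass. This Python sketch binds each root name as a parameter through a DB-API 2.0 connection and returns a mapping from root name to the objects that reference it. The function name is hypothetical, and the `?` placeholder assumes a qmark-style driver such as sqlite3 or pyodbc; change it to match your driver's paramstyle.

```python
from typing import Dict, Iterable, List

# The classification query from this post, with the root name as a bind parameter.
CLASSIFICATION_USAGE_SQL = """
select name
from IBS_SPEC_TYPE
where spec_template_id in
  (select spec_template_id from ibs_spec_value_meta_data
   where atr_type = 'Classification' and
   classification_root_name = ?)
"""


def objects_using_classifications(conn, root_names: Iterable[str]) -> Dict[str, List[str]]:
    """Map each classification root name to the sorted names of objects that use it."""
    results: Dict[str, List[str]] = {}
    cur = conn.cursor()
    try:
        for root in root_names:
            cur.execute(CLASSIFICATION_USAGE_SQL, (root,))
            results[root] = sorted(row[0] for row in cur.fetchall())
    finally:
        cur.close()
    return results
```

An empty list for a root means no object has a field pointing to it, which is a good sign (though not proof) that the values are safe to change.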
